Researcher in the Spotlight: Bob Hendrikx

Within the FAST project, my topic is localization and navigation for mobile industrial robots.

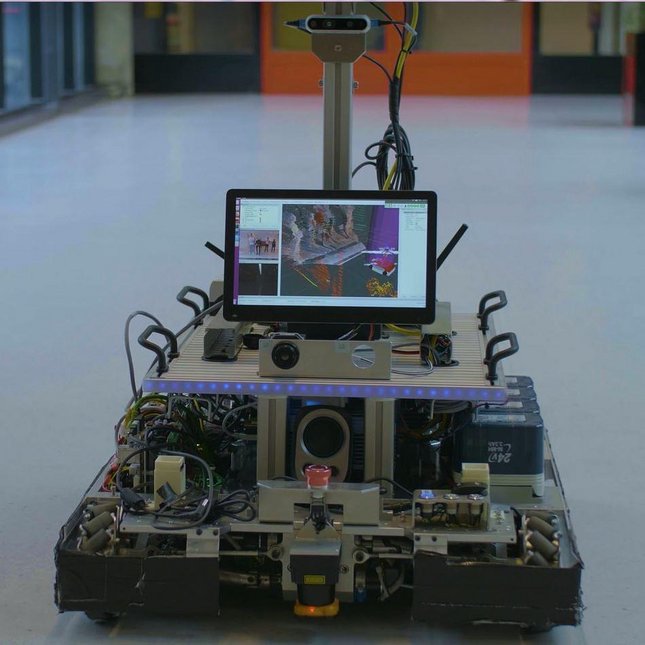

Hi, my name is Bob Hendrikx and I’m working in the Control Systems Technology research group of the Department of Mechanical Engineering. Within the FAST (new Frontiers in Autonomous Systems Technology) project, my topic is localization and navigation for mobile industrial robots. The goal is to make robots behave robustly when their environment changes, by letting them ‘understand’ their surroundings as much as possible.

Looking for landmarks

Enabling robots to explain what they are doing is a key step forward for localization and navigation. The current generation of mobile robots requires environments that stay close to their initial state or that are fitted with special infrastructure, such as inductive strips or beacons. To help robots understand their surroundings, we use a semantic world representation that lets them relate sensor information to concepts and instances in an explainable and extendable way. We achieve this by making explicit data associations with objects in the real world (landmarks) and maintaining these associations over a time horizon.
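To make the idea of explicit data associations more concrete, here is a minimal Python sketch. It assumes that landmarks are 2D points with a semantic class in the map frame and that detections have already been transformed into that frame. The names `Landmark` and `associate`, the 0.5 m gate and the 10 s horizon are hypothetical illustrations, not the FAST software. The matching rule is plain nearest-neighbour gating, used here only as a stand-in for whatever the project actually uses. Each successful association is time-stamped, and stamps older than the horizon are discarded.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Landmark:
    """Hypothetical map landmark: a named object instance with a semantic class."""
    name: str
    cls: str
    x: float
    y: float
    # Times at which this landmark was associated with a detection.
    seen_at: list = field(default_factory=list)

def associate(detections, landmarks, t, gate=0.5, horizon=10.0):
    """Match each detection (cls, x, y) to the nearest landmark of the same class
    within `gate` metres, then drop association stamps older than `horizon` seconds.
    Returns a list of (detection_index, landmark_name or None)."""
    result = []
    for i, (cls, x, y) in enumerate(detections):
        best, best_d = None, gate
        for lm in landmarks:
            if lm.cls != cls:
                continue  # the semantic class must agree before geometry is compared
            d = math.hypot(lm.x - x, lm.y - y)
            if d <= best_d:
                best, best_d = lm, d
        if best is not None:
            best.seen_at.append(t)
        result.append((i, best.name if best else None))
    # Maintain associations only over the sliding time horizon.
    for lm in landmarks:
        lm.seen_at = [s for s in lm.seen_at if t - s <= horizon]
    return result

landmarks = [Landmark("rack_A", "rack", 2.0, 1.0), Landmark("door_1", "door", 5.0, 0.0)]
detections = [("rack", 2.1, 1.2), ("door", 8.0, 0.0)]
print(associate(detections, landmarks, t=0.0))
# [(0, 'rack_A'), (1, None)]
```

Here the door detection lies 3 m from the mapped door, far outside the gate, so it is left unassociated rather than forced onto the map. Checking the semantic class before the distance is what lets the representation stay explainable: every match can be traced back to "same kind of object, close enough".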

When the robot is unsure about a data association (“is this really the object I think it is on my map?”), it may add the association as a hypothesis. It can then reason about whether it has enough information to perform its next navigation action or whether it first needs to find better landmarks. We’re building a software system that enables this by letting various plugins update a shared ‘local world model’ around the robot.
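The hypothesis mechanism and the plugin-based world model can be sketched as follows. This is a minimal Python illustration under several assumptions: each plugin is a plain callable that reads and writes one shared `WorldModel`, an association whose confidence falls below a threshold is stored as a hypothesis rather than committed, and the robot only proceeds when enough distinct confirmed landmarks are available. `WorldModel`, `add_association`, `ready_to_navigate`, the toy detector plugins, the 0.8 threshold and the two-landmark minimum are all hypothetical choices, not the project's actual interface.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class WorldModel:
    """Hypothetical shared local world model around the robot."""
    confirmed: Dict[str, str] = field(default_factory=dict)         # detection id -> landmark
    hypotheses: Dict[str, List[str]] = field(default_factory=dict)  # detection id -> candidates

    def add_association(self, det_id: str, landmark: str, confidence: float,
                        threshold: float = 0.8) -> None:
        if confidence >= threshold:
            # Confident: commit the association and drop open hypotheses for it.
            self.confirmed[det_id] = landmark
            self.hypotheses.pop(det_id, None)
        else:
            # Unsure ("is this really the object on my map?"): keep it as a hypothesis.
            self.hypotheses.setdefault(det_id, []).append(landmark)

    def ready_to_navigate(self, min_landmarks: int = 2) -> bool:
        """True if enough distinct confirmed landmarks exist to act on;
        False means the robot should first look for better landmarks."""
        return len(set(self.confirmed.values())) >= min_landmarks

Plugin = Callable[[WorldModel], None]

def update(world: WorldModel, plugins: List[Plugin]) -> None:
    """Run each plugin in order; all of them read and write the same model."""
    for plugin in plugins:
        plugin(world)

# Two toy plugins standing in for real perception algorithms.
def rack_detector(world: WorldModel) -> None:
    world.add_association("det_0", "rack_A", confidence=0.95)

def door_detector(world: WorldModel) -> None:
    world.add_association("det_1", "door_1", confidence=0.55)

world = WorldModel()
update(world, [rack_detector, door_detector])
print(world.confirmed, world.hypotheses, world.ready_to_navigate())
# {'det_0': 'rack_A'} {'det_1': ['door_1']} False
```

Keeping the uncertain door match as a hypothesis, rather than discarding or committing it, is what allows a later plugin to confirm it with better evidence. Once that happens, `ready_to_navigate` flips to `True` without any plugin needing to know about the others.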

Programming intuition

Managing the complexity of this software and orchestrating the individual algorithms within the architecture are real challenges. A robot is a complex system in which many sensors must work together to arrive at an estimate of what the world looks like. This estimate must be able to recover from wrong hypotheses that originate from noisy sensor data or false assumptions. Furthermore, these sensors produce large amounts of data, and the robot’s software architecture needs to support all the planning, perception and control in a modular and explainable way.

This research tackles these challenges, so it will be relevant to anyone interested in robot navigation in industrial settings where maps can be made a priori but the environment is dynamic and cluttered. Because of the focus on explainability, the resulting robot behavior will be more predictable and intuitive, which will benefit anyone present in the robot’s workspace.

Bringing it all together

Within the FAST project, we all work together extensively. We plan to demonstrate all of our research in a single software stack that can be deployed on various robots. The reasoning capabilities that Hao-Liang Chen’s research will provide and the human-aware navigation skills that Margot Neggers will develop will be intertwined with the general navigation and localization stack that Liang and I are working on. This integration will be essential to the explainability of the robots’ behavior.

We are currently testing the first demonstrator of the new software stack within the university environment. A number of Master’s students have also demonstrated some of the concepts within the companies taking part in the FAST project. The ultimate aim is robots that can support humans in tasks that are tedious, repetitive or dangerous to execute.