Researcher in the Spotlight: Hao Liang Chen

Making mobile robots more explainable and robust in semi-open environments

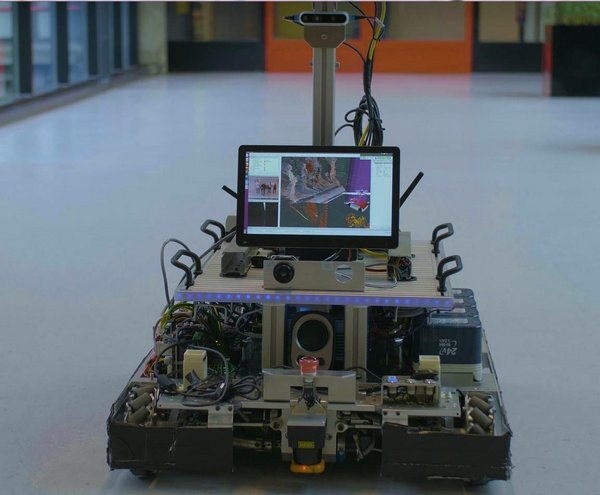

My name is Hao Liang Chen and my research focuses on making mobile robots more explainable and robust in semi-open environments, i.e. structured environments in which we know what kind of disturbances to expect but not how exactly they will occur. This research takes place in the Control Systems Technology research group of the Department of Mechanical Engineering.

NEW COMBINATIONS OF KNOWLEDGE

An example of a semi-open environment would be a robot driving through a corridor, in which the structured environment is represented by static walls (and doors) and the disturbances by (vague) notions like ‘doors being opened’, ‘people walking around’ or ‘boxes being put on the ground’. My approach is to give the robot some common-sense knowledge to allow it to deduce its desired behavior, e.g. “walls are solid, hence I cannot go through them”. A step further is to combine pieces of knowledge in order to deduce the context of a situation. For example, pieces of knowledge like “my name is being called”, “the person calling me is looking at me” and “the person is carrying something” may be combined to deduce that a person is calling the robot to help them with something.
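As a rough illustration of how such pieces of knowledge could be combined, the sketch below uses simple forward chaining over hand-written rules. It is not the project's actual implementation: the fact names, the intermediate conclusion `caller_wants_my_attention` and the function `deduce` are all hypothetical and chosen only for this example.

```python
# Hypothetical facts and rules, for illustration only.
# Each rule: (facts that must all hold, conclusion that may then be added).
RULES = [
    ({"name_called", "caller_looking_at_me"}, "caller_wants_my_attention"),
    ({"caller_wants_my_attention", "caller_carrying_something"}, "caller_needs_help"),
]


def deduce(observations):
    """Forward chaining: keep applying rules until no new fact appears."""
    facts = set(observations)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in RULES:
            # A rule fires when all its premises are known and it adds something new.
            if premises <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


observed = {"name_called", "caller_looking_at_me", "caller_carrying_something"}
context = deduce(observed)
```

With all three observations present, `context` contains `caller_needs_help`; if the person is not looking at the robot, neither rule fires and no such conclusion is drawn.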

The challenge, meanwhile, is determining what kind of knowledge to give the robot. One could, for example, give the knowledge “you should go to location A”, but the pitfall is that this cannot really be reused for other applications nor is it explainable. Why should the robot go to location A? A better approach is to decompose the knowledge into two pieces, like “I need to do action X” and “location A allows me to perform action X.” Via these pieces of knowledge, the robot can deduce that it should go to location A.
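The decomposition can be sketched in a few lines. This is a minimal example under assumed names: the `AFFORDANCES` table, the action `"charge"` and the function `deduce_goal` are hypothetical and not taken from the project. The point is that the goal location is derived rather than hard-coded, and the derivation doubles as an explanation.

```python
# Hypothetical knowledge: which actions each location allows.
AFFORDANCES = {
    "location A": {"charge"},
    "location B": {"deliver package"},
}


def deduce_goal(needed_action, affordances):
    """Return (location, explanation) pairs for locations that allow the action."""
    results = []
    for location, actions in affordances.items():
        if needed_action in actions:
            explanation = (
                f"I need to {needed_action}, and {location} "
                f"allows me to {needed_action}."
            )
            results.append((location, explanation))
    return results


goals = deduce_goal("charge", AFFORDANCES)
```

Because "I need to do action X" and "location A allows action X" are stored separately, the same affordance table can be reused for a different need, and an action that no location allows simply yields an empty list.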

MOVING BEYOND 'IF-ELSE'

As robots become more present in our daily lives, they need to be able to deal with all the possible variations in our environments. Giving robots some common-sense knowledge allows them to better integrate into our society and facilitates situations in which robots cooperate with humans. This research is therefore interesting for people who want to deploy mobile robots in semi-open environments and who also want the robot to be able to understand and explain why it did something in a certain way. One shouldn’t need to pre-program every action sequence the robot could take in an ‘if-else’ fashion. Given the many variations possible in the real world, we hypothesize that pre-programming everything is in fact impossible.

THE BUILDING BLOCKS OF SELF-AWARENESS

In this project, I work with two other PhD students and one postdoc. One of the PhD students, Bob Hendrikx, focuses on the localization and world-modeling aspects, i.e. giving the robot the tools for some sense of awareness. I provide the reasoning aspect that lets the robot itself determine how to use these tools. The other PhD student, Margot Neggers, focuses on human-robot interaction, providing me with knowledge of how the robot should behave in the presence of humans. The robot’s reasoning should then use this knowledge to adjust its behavior. The postdoc, Liang Li, focuses on the learning aspect of this project, so we can collaborate in the future on how the robot should learn new knowledge. For now, I’m busy implementing and testing my approach in a use case.